Seungwon Lim

Hi, I'm Seungwon Lim. I'm a researcher in LangAGI (Language & AGI Lab) at Yonsei University, advised by Jinyoung Yeo. I received my bachelor's degree in Computer Science, and I am currently pursuing an integrated MS/PhD program in Computer Science.

Currently, I'm working as a research scientist intern at Exaone Lab, where I conduct research on building advanced foundation large language models.

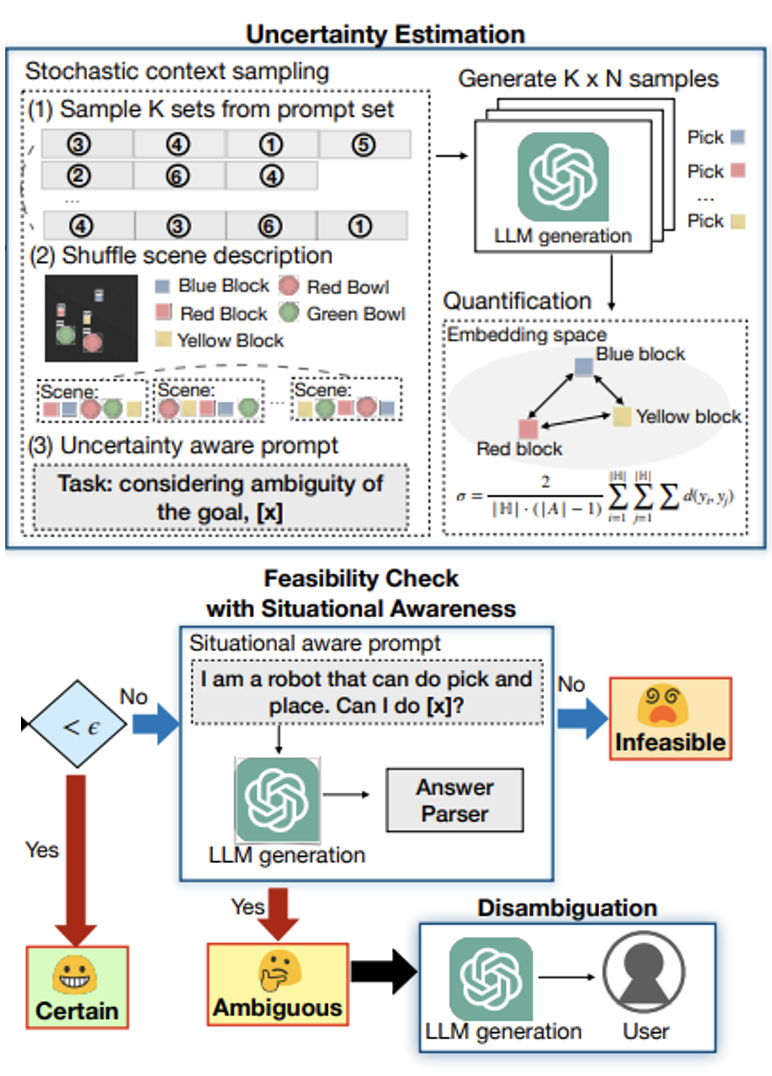

My research centers on developing reliable agent systems. To that end, I currently focus on agent reasoning, action decision-making, and human-centric AI.

Publications

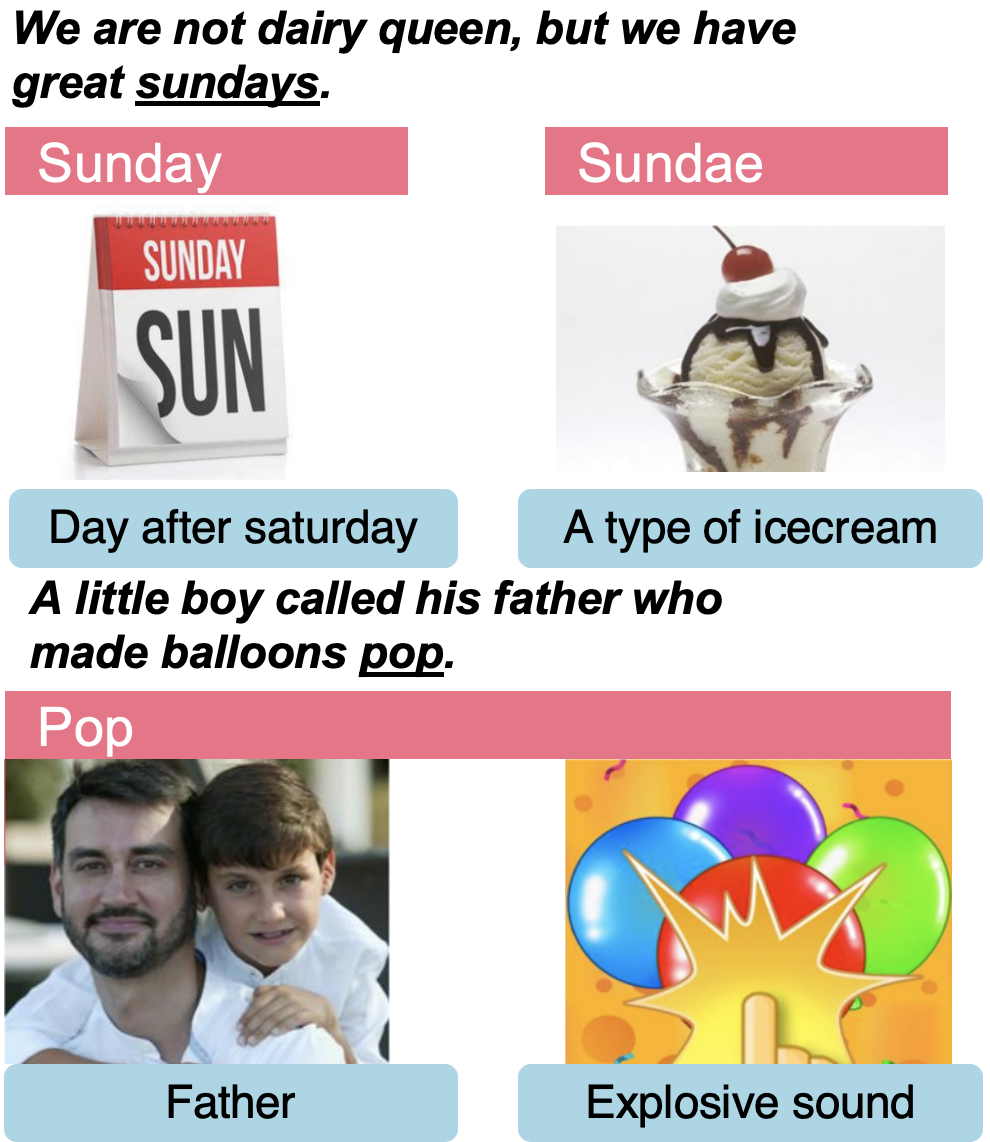

Do LLMs Have Distinct and Consistent Personality? TRAIT: Personality Testset designed for LLMs with Psychometrics

NAACL 2025 Findings

TL;DR: We introduce TRAIT, a psychometrics-based benchmark that measures the personality revealed in the behavior patterns of LLMs, and we verify its reliability and validity.